The Challenge With Benchmarking for Seed Selection

Benchmarking how a farmer’s operation performs can be useful, but it can also be wrong.

In agriculture, where many fields appear similar from the road, more farmers are looking for ways to compare their operations against their neighbors, their region, or their state. This idea of comparing a farmer’s operation to others has become known as “benchmarking,” and is touted by some digital ag companies as a way to gain useful insight into seed performance. But these comparisons typically offer simple yield averages across fields and gloss over the differences that exist between fields and operations. Benchmarking misses the bigger opportunity in digital farming: precision.

A Confusing Result

The typical situation I’ve seen starts with an early-adopter farmer adding digital farming tools to their operation. They experience how digital tools improve their operation and profitability — they become a believer.

But when it comes to seed benchmarking, even these digital trailblazers get confused.

Like a majority of farmers using digital farm management software, these farmers often run split-planting trials. It’s a story I’ve heard more than once: “I have these two hybrids I’m looking at on my farm. The benchmark from a digital provider says Hybrid B is the winner to pick. But when I dig into my side-by-side data, Hybrid A comes out on top. Isn’t all this digital farming data supposed to line up?”

Breaking Down Benchmarks

It’s helpful to understand what benchmarks are really measuring when it comes to seed performance. Benchmarks are broad roll-ups of crop performance across a large variety of farming practices and environments, whether that’s on one operation, in a region, or globally for that specific seed. This basic analysis of averages doesn’t give farmers an accurate look at the best genetic performers for their operation or fields. It favors seeds planted in high-yielding fields and environments. What’s more, a lot of “big data” tools actually have very limited data, and in some of the worst cases I see, benchmarking results are based on just a few fields and rarely span multiple years. Don’t let the digital interface trick you into trusting limited data.

So let’s go back to our example farm. Yield is influenced by a host of factors beyond genetics: soil composition and organic matter, water availability, weather, fertility and crop protection applications, crop rotation, tillage, use of cover crops, planting downforce, and more. When farmers account for all that variability, often through split-planting trials, Hybrid A emerges as the true winner.

But why the conflicting benchmark result? Hybrid A is the primary seed, and gets planted on a lot more acres than Hybrid B. Hybrid B was placed on fields that had a more favorable growing season than the broad set of fields Hybrid A was planted on. When it came time to summarize the harvest performance, Hybrid B got a yield lift from the weather and favorable soil conditions. The yield results are accurate, but they don’t measure the genetic performance of the seed, and they’re a poor estimate of future performance ranking.
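To make that mechanism concrete, here is a minimal Python sketch. Every number in it is hypothetical and chosen only for illustration (the field names, yield potentials, and the assumed 5 bu/ac genetic edge for Hybrid A are not from any real dataset). It compares a simple benchmark average per hybrid with the within-field difference from split-planted fields.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical assumption: in identical conditions, Hybrid A out-yields B by 5 bu/ac.
GENETIC_EFFECT = {"A": 5.0, "B": 0.0}

# Hypothetical field yield potential (bu/ac) set by soil, weather, and management.
FIELD_POTENTIAL = {
    "north": 170.0, "creek": 175.0, "home": 185.0,
    "hill": 180.0, "bottom": 215.0, "tile": 220.0,
}

# Hybrid A is the primary seed on most fields; Hybrid B lands mostly on the
# best ground. "hill" and "tile" are split-planted with both hybrids.
PLANTINGS = {
    "north": ["A"], "creek": ["A"], "home": ["A"],
    "hill": ["A", "B"], "bottom": ["B"], "tile": ["A", "B"],
}


def build_records():
    """Return (field, hybrid, yield) rows: field potential plus genetic effect."""
    return [
        (field, hybrid, FIELD_POTENTIAL[field] + GENETIC_EFFECT[hybrid])
        for field, hybrids in PLANTINGS.items()
        for hybrid in hybrids
    ]


def benchmark_averages(records):
    """Benchmark-style roll-up: mean yield per hybrid, ignoring which field it grew on."""
    by_hybrid = defaultdict(list)
    for _field, hybrid, yld in records:
        by_hybrid[hybrid].append(yld)
    return {hybrid: mean(yields) for hybrid, yields in by_hybrid.items()}


def split_planting_advantage(records, first="A", second="B"):
    """Mean within-field yield difference (first minus second) on split-planted fields."""
    by_field = defaultdict(dict)
    for field, hybrid, yld in records:
        by_field[field][hybrid] = yld
    diffs = [
        yields[first] - yields[second]
        for yields in by_field.values()
        if first in yields and second in yields
    ]
    return mean(diffs)


records = build_records()
print(benchmark_averages(records))        # {'A': 191.0, 'B': 205.0}
print(split_planting_advantage(records))  # 5.0
```

In this toy setup the benchmark average puts Hybrid B ahead by 14 bu/ac, while the side-by-side comparison shows Hybrid A ahead by 5 bu/ac on the same ground. The within-field difference cancels out each field’s potential; the roll-up average does not.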

Benchmarks Fall Short in Predicting Seed Performance

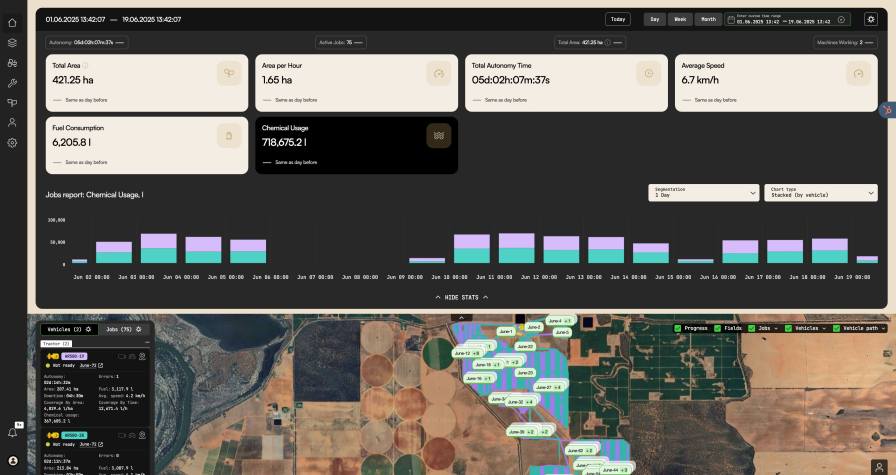

This experience got me thinking even more about industry seed benchmarks and their correlation — or lack thereof — to on-farm performance, specifically split-planting trials. My team just completed a first-of-its-kind analysis comparing seed performance between benchmark and split-planting data based on Climate FieldView data.

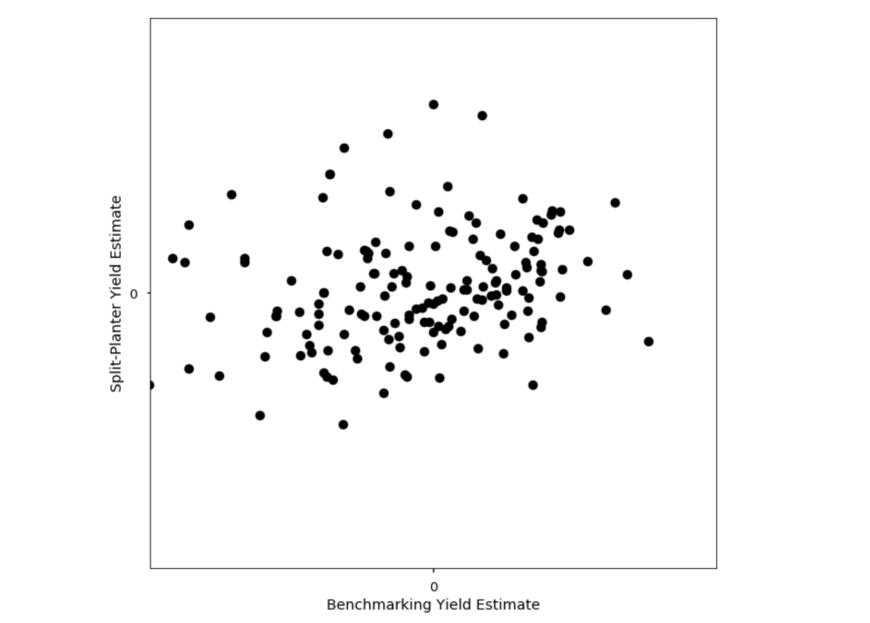

We analyzed data for 168 hybrids from 18 seed brands across more than 1.5 million acres in 2018. The results are hot off the press: we found a poor relationship between benchmark yield data and split-planting trial results! The scatter plot shows hybrids scattered all over the map.

Plot of split-planting yield estimate against benchmarking yield estimate for 168 hybrids across 1.5 million acres in 2018.

This indicates that seed benchmark data is not a good predictor of in-field performance, nor is it an effective guide for seed selection. At least, not yet. There are simply too many interdependent agronomic and weather factors at work in each field. Add human bias to the mix (the best seeds get the best acres), and what farmers end up with is a slick digital interface with pretty charts and tables that tell them little or, worse, point them in the wrong direction on seed selection.

There’s a Better Way

When I’m out talking with farmers, they tell me they want grounded, data-based insights on what worked (and what will work) on their fields. They want valid estimates of how every aspect of agronomic management affected yield. Which hybrid performed better? Did the tillage system or downforce units make a difference? Did the fertility or fungicide they applied deliver a positive return on their investment? What worked better, 20-inch or 30-inch rows? And did that biological application provide any lift?

If farmers want to find the best seed for their best acre and the best seed for their toughest acre, they need more analytics power driving their digital seed recommendations. Building systems to help them find the right seed for every corner of their operation is something we’re deeply invested in and working on right now. Tools like our recently launched Seed Advisor are the start, and we’re testing next-generation products in our research fields and with farmers this season.

Better Benchmarking

To be clear, it’s not that the idea of benchmarking is wrong; we all want to know what seed will perform best on each of our fields. But most benchmarking tools fail to live up to their current promises, because predicting which seed will perform best is confounded by many variables that these tools cannot accurately address.

Here’s my personal bet. I believe hiding between the current benchmark yield averages and split-planting trial yield — that murky place of “poor correlation” — is a complex web of agronomic rules that no one human mind can comprehend. Computers, on the other hand, might be able to parse it out. We’re using deep learning to investigate these relationships right now, and with some luck, the resulting AI tech will land on fields in the future.

In the meantime, my advice to farmers is the same advice I give my brothers: put your benchmarks in context, run split-planting trials, and get to know your farming data. The best solutions for farmers are going to come through customized insights rooted in their own data, not by looking over their shoulder at everyone else’s.

A Note to Industry

I urge industry leaders to take seriously our roles as stewards of digital farming innovation, and to commit to delivering clear, grounded information that promotes technology adoption and farmer success. Responsible innovation and product marketing are essential as we build up this quickly evolving digital farming capability. This fall when the harvesters are running, let’s urge farmers to look deeper at their data, not broadly at the crowd. The herd is often on the right path, but rarely smart. Let’s focus on helping each farmer use digital tools to raise their operation’s IQ, and not insult them with superficial analytics.